Data science interviews are complex, sometimes unpleasant, and can freak out even the strongest and the most experienced candidates. The field is broad and spans everything from statistics and machine learning to coding and business acumen.

You may be asked about all of these areas — statistics and probability, data structures and algorithms, ML algorithms, deep learning algorithms, concepts related to natural language processing, vision, time series, recommendation systems, and clustering.

On top of that, data science is a very competitive field. You’ll need a systematic approach and we’re here to help you nail everything.

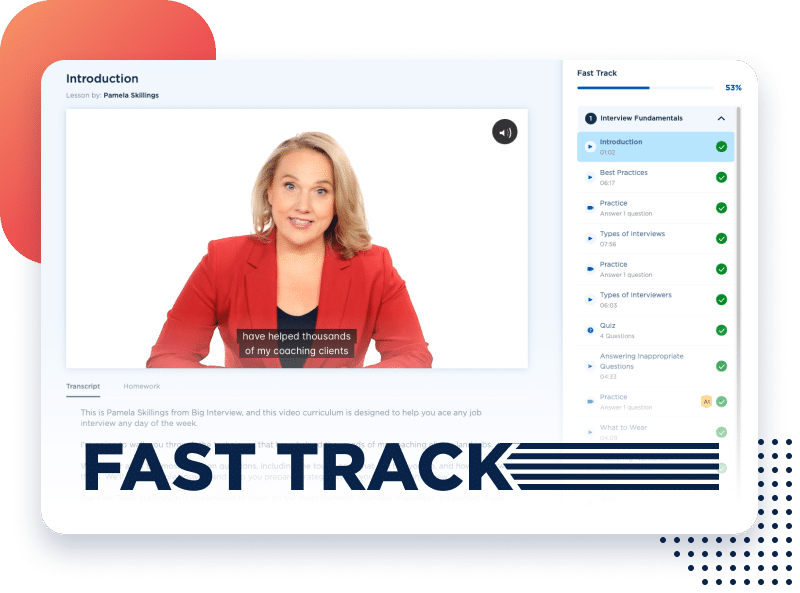

Want to get an offer after every interview? Our interview preparation tool will guide you through all the questions you can expect, let you record and analyze your answers, and provide instant AI feedback. You’ll know exactly what to improve to turn your next interview into a job.

Don’t waste days compiling overused interview techniques. Get original answers to every single question you could expect.

Here’s a quick breakdown of what you need to know to do well in a data scientist interview:

- A data science interview can take several rounds and the questions may get more technical as candidates get shortlisted.

- Apart from the key technical skills, the interviewers also want to hire someone who has the right mix of soft skills (like communication) and the ability to explain model results to non-tech stakeholders.

- You’ll get several scenario-based questions or questions where you need to explain how you solved a problem or accomplished a difficult task in the past. For these questions, it’s important to choose relevant examples and tell a story in a structured way that highlights tangible results.

To help you nail your next data science interview, we’ll cover:

- 17 essential data science interview questions that cover the core areas you can expect

- Some sample answers and tips on how to approach the answers

- Top tech skills and 6 frequently asked questions

What Makes Data Science Interviews Different

When interviewing for data science roles, you should expect a thorough assessment of both your technical skills and your ability to derive actionable insights from data.

Data science interviews often incorporate live coding tests, data manipulation tasks, and on-the-spot statistical problem-solving. You may be asked to write code in Python or R, use SQL for data retrieval, or apply machine learning algorithms during the interview (this will depend on the specific role in question). You may also get a data challenge to complete at home.

But it’s not all about the results. The interviewers also want to know about your thought process. When approaching data-related problems, you’ll typically need to walk the interviewer through your methods of data exploration, hypothesis building, testing, and interpretation.

Finally, you need to show that you understand the role of data science within a broader business context. Your work will directly impact business decisions, so the interview may involve real-life scenario-based questions where you should explain how you would use data to tackle business problems, forecast company needs, or improve processes.

Here are the most common interview questions you’ll be asked in a data science interview*:

- Tell me about your experience with data science.

- What made you want to become a data scientist?

- Which programming languages are you proficient in?

- Can you describe your process for data cleaning and preparation?

- Describe a time you derived a valuable insight from a data set.

- Tell me about a challenging data project and how you handled it.

- How do you continue learning and improving your data science skills?

- Describe a project where you needed to learn a new tool or technology quickly. How did you manage?

- Can you share an example where you had to work with extremely large datasets? What challenges did you face and how did you overcome them?

- How do you handle missing or corrupted data in your dataset?

- What approach would you take to explain a technical model to a non-technical stakeholder?

- Suppose you are working on a model that is underperforming, what steps do you take to diagnose the problem and potentially improve the model’s performance?

- If given a dataset from a department you’re not familiar with, how would you proceed with extracting actionable insights?

- What models have you built that you are most proud of, and why?

- Can you discuss your experience with real-time data processing and its implementation in previous projects?

- What experience do you have with integrating machine learning models into production systems?

- Explain how you have used data visualization to influence business decisions.

*This piece covers the nuances of data science interviewing and the most important types of questions from different categories. For in-depth engineering questions (including highly detailed whiteboard question walkthroughs), check out TestGorilla or Interviewing.io. We love these two resources, and we’re sure you’ll find them useful.

Common Data Scientist Interview Questions and Sample Answers

Basic Interview Questions for Data Scientists

Tell me about your experience with data science.

Sample answer:

I’m currently working as a Senior Data Scientist at Proofpoint, and have a strong background in Python, SQL, and machine learning. My career started in a more traditional data analyst role for a data archiving company, but I quickly moved into data science because I saw how much more impact my work could have by using data-driven models and automation, especially because of the nature of the industry and how much data we had accumulated.

Over the years, I’ve led projects that involve everything from exploratory data analysis and feature engineering to model development and deployment. I’m well-versed in applying machine learning techniques like regression, classification, and clustering, and I’m always exploring the latest advancements in deep learning and MLOps.

One of my strengths is translating complex business problems into data science solutions, ensuring that the insights we generate are actionable and aligned with company strategy and goals.

Tips on how to answer:

- Start strong — “Tell me about yourself” or a similar variation is usually the first question you get. Introduce yourself and talk about your past work experience, key responsibilities, and skills. Ideally, mention your relevant past achievements and explain how your strengths can contribute to the company.

- Get specific — Mention specific tools, languages like Python or SQL, and techniques like machine learning, regression analysis, neural networks, anomaly detection, and data visualization — all aligned with the role you’re interviewing for.

- Keep your answer concise and structured — Don’t rehash your entire resume. Focus on your professional life, and make sure your answer has a clear beginning, middle, and end.

What made you want to become a data scientist?

Sample answer:

It started with a love for problem-solving and a fascination with data. Early in my career, I was working in a role that required a lot of manual data processing and analysis. I quickly realized that if I used programming and statistical methods, I could automate tasks and learn about patterns and business insights that were otherwise hidden in the data.

The more I learned, the more I became captivated by the power of data to drive decisions, whether it’s improving business processes, optimizing products, or predicting future trends. I was particularly drawn to the intersection of data and machine learning, where I could build models that not only explain the past but also forecast the future.

I like it because it’s a perfect blend of analytical rigor, creativity, and the ability to make a real, tangible impact. The field is constantly evolving too. This keeps me excited and motivated to keep learning.

Tips on how to answer:

- Show passion and drive — The interviewers want to see both your skills and your passion for the role, so make sure to mention your natural curiosity and love of problem-solving.

- Highlight how you used data to solve a complex issue.

- Link your past experiences with your current career goals — Explain how becoming a data scientist aligns with your desire to continuously learn, tackle complex challenges, and contribute to meaningful projects that have real-world impact.

Which programming languages are you proficient in?

Sample answer:

I’m great at Python, which is my go-to language because of its extensive libraries like Pandas, NumPy, and Scikit-learn. I use Python for everything from data wrangling and analysis to ML model development. I’m also strong in SQL, which I use regularly for querying and managing databases, especially when working with large datasets.

I’m comfortable with R for statistical analysis, though I primarily use Python. I’ve also dabbled in Java and C++ for more computationally intensive tasks, though these are less common in my day-to-day work. Beyond that, I have experience with shell scripting and basic knowledge of MLOps tools, which ties into deploying models in production environments.

I’m trying to stay versatile and adapt my programming skills to the needs of the specific project, whether it’s building machine learning models, automating data pipelines, or integrating with APIs.

Tips on how to answer:

- Aim for a targeted answer — Focus on the languages most relevant to the position you’re applying for, such as Python, R, and SQL, explain when and to which extent you’ve used them, and try to match them with the requirements from the job description. Highlight your experience with key libraries and tools associated with each language.

- Show you’re adaptable — If you’re less familiar with a required language, show them that you can pick up a new language (it’s best to give a specific example when you learned a new language, model, or tool in a previous role).

Can you describe your process for data cleaning and preparation?

Sample answer:

I typically start by understanding the dataset: its source, structure, and the type of data it contains. The first step is to handle missing data, which might involve imputation, removal, or flagging, depending on the context. Then, I look for inconsistencies, such as duplicates, incorrect data types, or outliers, and address them through normalization, transformation, or filtering.

Next, I standardize formats across the dataset, ensuring uniformity in things like dates, units, and categorical variables. I also consider feature engineering if I see opportunities to create new variables that could add value to the model. Throughout the process, I document my steps carefully, so I have a clear audit trail and can reproduce the results if needed.

Tips on how to answer:

- Show that you have a structured approach — Explain your process step by step and highlight the tools and techniques you use.

- Go beyond theory — Provide examples of how you applied these in past projects to ensure data quality.
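If you want to make the cleaning steps from the sample answer concrete, a short sketch can help. The example below is a minimal, dependency-free illustration of deduplication, median imputation with flagging, and format standardization. The records, field names, and the `parse_date` and `clean` helpers are all invented for this example, and in a real project you would more likely use a library such as pandas.

```python
from datetime import datetime
from statistics import median

# Hypothetical raw records; field names and values are invented for illustration.
raw_rows = [
    {"id": 1, "signup": "2024-01-05", "plan": " Basic ", "monthly_spend": 20.0},
    {"id": 2, "signup": "05/02/2024", "plan": "premium", "monthly_spend": None},
    {"id": 2, "signup": "05/02/2024", "plan": "premium", "monthly_spend": None},  # duplicate
    {"id": 3, "signup": "2024-03-10", "plan": "BASIC", "monthly_spend": 30.0},
]


def parse_date(text):
    """Try a few known formats and return an ISO date string."""
    for fmt in ("%Y-%m-%d", "%d/%m/%Y"):
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"Unrecognized date format: {text!r}")


def clean(rows):
    # 1. Drop duplicate ids, keeping the first occurrence.
    seen, unique = set(), []
    for row in rows:
        if row["id"] not in seen:
            seen.add(row["id"])
            unique.append(dict(row))
    # 2. Impute missing spend with the median and flag the imputed rows.
    fill = median(r["monthly_spend"] for r in unique if r["monthly_spend"] is not None)
    for row in unique:
        row["spend_imputed"] = row["monthly_spend"] is None
        if row["spend_imputed"]:
            row["monthly_spend"] = fill
        # 3. Standardize date and categorical formats.
        row["signup"] = parse_date(row["signup"])
        row["plan"] = row["plan"].strip().lower()
    return unique


cleaned = clean(raw_rows)
```

Keeping the `spend_imputed` flag alongside the filled value is one way to preserve the audit trail the answer mentions, since later analysis can still tell observed values from imputed ones.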

Behavioral Interview Questions for Data Scientists

These questions aim to understand how you handled situations in the past and test your problem-solving skills, teamwork, and overall work ethic. Here are some common ones:

Describe a time you derived a valuable insight from a data set.

Sample answer:

In a previous role at a telecom company, I tackled the challenge of boosting sign-ups and retaining customers. We had tons of customer and market data to sift through.

I noticed that many customers were exceeding their data limits, leading to extra fees and frustration, while others barely used their data, making them potential switchers to cheaper competitors.

I applied clustering algorithms to segment customers by phone usage, behavior, and preferences. Then, I used predictive modeling to identify those most likely to leave. This helped us create targeted, affordable plans and perform a price elasticity analysis to find the optimal pricing.

We revamped our plans, introduced data tiers, and set up in-app alerts to prevent overage surprises. We also adjusted some existing plan prices.

The results were impressive: more new customers, higher retention, and a 24% revenue increase within 6 months.

Tips on how to answer:

- Provide context — Briefly introduce the project, its goals, and challenges to set the stage.

- Detail your analytical process — Explain the specific steps and processes you took to explore the data, identify patterns, and formulate hypotheses.

- Quantify the impact — Use concrete numbers and metrics to show the tangible results of your insight.

- Show initiative and collaboration — Highlight your proactive approach to problem-solving and your ability to work effectively with cross-functional teams.
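The sample answer mentions segmenting customers with clustering algorithms without naming one. As a rough illustration only, here is a plain k-means sketch (Lloyd's algorithm) that separates light and heavy data users. The `kmeans` function, the starting centroids, and the usage figures are assumptions made up for this example, not the method used in the story.

```python
import math


def kmeans(points, centroids, iterations=10):
    """Plain k-means (Lloyd's algorithm) from caller-supplied starting centroids."""
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster.
        centroids = [
            tuple(sum(dim) / len(cluster) for dim in zip(*cluster)) if cluster else c
            for cluster, c in zip(clusters, centroids)
        ]
    return centroids, clusters


# Hypothetical (monthly GB used, monthly plan allowance in GB) per customer.
usage = [(1.0, 10.0), (1.5, 10.0), (2.0, 10.0), (11.0, 10.0), (12.0, 10.0), (13.0, 10.0)]
centroids, clusters = kmeans(usage, centroids=[(0.0, 10.0), (15.0, 10.0)])
```

Here the two resulting groups correspond to customers who barely touch their allowance and customers who exceed it, which mirrors the two segments described in the answer.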

Tell me about a challenging data project and how you handled it.

Sample answer:

I tried a few different things. I started with simple resampling techniques (random oversampling and undersampling), but these either introduced bias or resulted in information loss. Then, I tried SMOTE to generate synthetic samples of the minority class and balance the dataset without compromising its integrity.

I also focused on feature engineering and worked closely with the fraud experts to figure out what really sets a dodgy transaction apart. We looked at things like how fast someone was spending money, unusual spending patterns, or geographical anomalies.

For this, I used ensemble learning, combining multiple models like decision trees, random forests, and gradient boosting to enhance predictive power.

Throughout the project, I made sure the executive team understood what we were doing and why. I used visuals to show how well our model was working.

Tips on how to answer:

- Clearly define the challenge — Explain the specific technical or data-related obstacle you faced in a way that’s easy to understand.

- Showcase your technical skills — Describe the specific techniques, algorithms, and tools you used to overcome the challenge. For example, mention libraries like imblearn in Python for implementing SMOTE.

- Highlight problem-solving and adaptability — Demonstrate your ability to think critically, experiment with different approaches, and adjust your strategies as needed.

- Emphasize collaboration and communication — Show how you worked effectively with domain experts and stakeholders, and go through technical concepts clearly and concisely.

- Conclude with specific results — If possible, provide concrete metrics to show the positive business outcomes of your efforts.
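If you mention SMOTE, be ready to explain what it does. The sketch below shows the core idea under simplifying assumptions: pick a minority-class sample, pick one of its nearest minority neighbours, and create a new point somewhere on the line between them. The `smote` function and the fraud feature values are hypothetical, and this is not the imblearn implementation, which handles more cases.

```python
import math
import random


def smote(minority, n_synthetic, k=2, seed=0):
    """Create synthetic minority samples by interpolating toward near neighbours."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_synthetic):
        base = rng.choice(minority)
        # k nearest minority-class neighbours of the base point (excluding itself).
        neighbours = sorted(
            (p for p in minority if p is not base),
            key=lambda p: math.dist(base, p),
        )[:k]
        target = rng.choice(neighbours)
        gap = rng.random()  # position along the segment from base to target
        synthetic.append(tuple(b + gap * (t - b) for b, t in zip(base, target)))
    return synthetic


# Hypothetical fraud-class feature vectors: (amount, transactions per hour).
fraud = [(900.0, 5.0), (950.0, 6.0), (1000.0, 7.0), (1100.0, 9.0)]
new_points = smote(fraud, n_synthetic=6)
```

Because every synthetic point is an interpolation between two real minority samples, it stays inside the region the minority class already occupies, which is the main difference from simply duplicating rows.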

How do you continue learning and improving your data science skills?

Sample answer:

For a personal project, I recently built a recommendation system to suggest books based on user preferences. I used Goodreads’ API and applied matrix factorization to predict users’ preferences based on similar users’ ratings. I used RMSE as the key metric and tuned hyperparameters to minimize the error.

I also think it’s really important to be part of the data science community, so I’m pretty active on Reddit and GitHub.

Tips on how to answer:

- Emphasize a proactive learning approach — Highlight how you take the initiative to learn new things even if it’s not an explicit job requirement. Mention personal projects, real-life applications of data science, or how you apply skills in your current role.

- Accentuate community engagement — Talk about how you connect with other data scientists.

- Illustrate your love of challenges — Show your willingness to tackle tough problems and step outside your comfort zone.
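An interviewer may ask how matrix factorization and RMSE fit together in a project like the book recommender above. The following is a minimal sketch, assuming a tiny in-memory list of ratings rather than any real API data: it learns user and item factor vectors with stochastic gradient descent and reports RMSE before and after training. The function names, hyperparameter values, and ratings are invented for illustration.

```python
import math
import random


def rmse(ratings, user_f, item_f):
    """Root mean squared error of predicted vs. observed ratings."""
    errors = [
        (r - sum(a * b for a, b in zip(user_f[u], item_f[i]))) ** 2
        for u, i, r in ratings
    ]
    return math.sqrt(sum(errors) / len(errors))


def factorize(ratings, n_users, n_items, n_factors=2, lr=0.01, reg=0.02, epochs=300, seed=0):
    """Learn user and item factor vectors with stochastic gradient descent."""
    rng = random.Random(seed)
    user_f = [[rng.uniform(-0.1, 0.1) for _ in range(n_factors)] for _ in range(n_users)]
    item_f = [[rng.uniform(-0.1, 0.1) for _ in range(n_factors)] for _ in range(n_items)]
    start = rmse(ratings, user_f, item_f)  # error of the random starting point
    for _ in range(epochs):
        for u, i, r in ratings:
            err = r - sum(a * b for a, b in zip(user_f[u], item_f[i]))
            for f in range(n_factors):
                pu, qi = user_f[u][f], item_f[i][f]
                # Gradient step with L2 regularization on both factors.
                user_f[u][f] += lr * (err * qi - reg * pu)
                item_f[i][f] += lr * (err * pu - reg * qi)
    return user_f, item_f, start


# Hypothetical (user, book, rating) triples on a 1-5 scale.
ratings = [(0, 0, 5), (0, 1, 3), (1, 0, 4), (1, 2, 1), (2, 1, 2), (2, 2, 5)]
user_f, item_f, rmse_before = factorize(ratings, n_users=3, n_items=3)
rmse_after = rmse(ratings, user_f, item_f)
```

This measures error on the training ratings only. In a real project you would tune `lr`, `reg`, and `n_factors` against RMSE on held-out ratings, which is what "tuned hyperparameters to minimize the error" usually implies.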

Describe a project where you needed to learn a new tool or technology quickly. How did you manage?

Sample answer:

At my last job, we decided to switch over to Tableau for our data visualization needs. I was pretty comfortable with analyzing data and writing code, but Tableau was new territory for me. I knew I had to learn it fast, so I took the initiative.

I signed up for an online course and spent evenings and weekends getting to grips with the software. I also tried to find any opportunity to practice what I was learning. I volunteered to make visualizations for upcoming presentations.

Within a few weeks, I was creating interactive dashboards and really insightful visualizations that we used in meetings and presentations with stakeholders.

Tips on how to answer:

- Discuss the business impact — Whenever possible, briefly mention how your quick learning contributed to positive outcomes for the project or company.

- Connect to other skills — Show how your ability to learn new tools complements your existing skills in data analysis, scripting, and statistical techniques.

- Show enthusiasm — You want to show that you’re open and excited about new technologies.

Can you share an example where you had to work with extremely large datasets? What challenges did you face, and how did you overcome them?

Sample answer:

Last year, I built a personalized recommendation engine for a big e-commerce platform called Shoppy. We were dealing with an enormous amount of data: billions of user interactions and product details. This presented major issues in terms of how to store, process, and train our models on such a massive scale.

We decided to use AWS EMR and distributed computing frameworks like Apache Spark. We also had to simplify the data and streamline our processes, so we could train our models faster.

In the end, we managed to handle the dataset effectively and build a recommendation system that really worked. We saw a noticeable increase in how users interacted with the platform, and sales went up by 9% too.

Tips on how to answer:

- Highlight technical proficiency — Showcase your experience with big data tools and techniques. Mention specific technologies and frameworks you’ve used.

- Focus on problem-solving — Explain the specific challenges you encountered due to the size and complexity of the dataset, and detail how you overcame them.

Read our in-depth guide on how to answer behavioral interview questions.

Situational Interview Questions for Data Scientists

Suppose you are working on a model that is underperforming, what steps do you take to diagnose the problem and potentially improve the model’s performance?

Sample answer:

I was tasked with building a classification model to predict customer churn for a telecom company. Initially, the model underperformed, with an F1 score of 0.58, lower than expected. I started by performing an in-depth analysis of the dataset, checking for data imbalance, missing values, and outliers. I found that the dataset had a class imbalance, with churned customers making up only 10% of the total, which negatively impacted precision and recall for the minority class.

Next, I evaluated the model’s performance by examining confusion matrices and classification reports to pinpoint issues with specific classes. I saw that the model struggled to correctly predict the minority class, and it also exhibited signs of overfitting. The training accuracy was 92%, but validation accuracy was only 68%.

To address this, I applied SMOTE to balance the dataset, optimized hyperparameters like learning rate and regularization strength through grid search, and revisited feature selection by engineering new features such as customer tenure and call drop rate. I also tested different algorithms, ultimately switching from a decision tree to a random forest model.

After retraining the model with balanced data and tuned parameters, the F1 score improved to 0.72. I documented each iteration and result to ensure reproducibility and transparency, which allowed me to refine the model efficiently and improve its performance.

Tips on how to answer:

- Showcase a systematic approach — Show that you follow a structured process to diagnose and improve underperforming models. This could include steps like data validation, error analysis, and experimentation with different techniques.

- Underscore adaptability and persistence — Communicate your ability to adapt your approach when initial solutions don’t bring the desired results.
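It helps to be able to show why the sample answer tracks F1 rather than accuracy when only 10% of customers churn. The sketch below computes accuracy, precision, recall, and F1 from scratch for two hypothetical sets of predictions. The `classification_metrics` helper and the label vectors are made up for this illustration.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 for one positive class."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": correct / len(y_true), "precision": precision, "recall": recall, "f1": f1}


# Hypothetical labels: 10 churners (1) among 100 customers, matching the 10% example.
y_true = [1] * 10 + [0] * 90
always_stay = [0] * 100  # a "model" that never predicts churn
some_signal = [1] * 6 + [0] * 4 + [1] * 4 + [0] * 86  # finds 6 churners, raises 4 false alarms

baseline = classification_metrics(y_true, always_stay)
model = classification_metrics(y_true, some_signal)
```

The predictor that never flags churn reaches 90% accuracy with an F1 of 0, while the second one scores an F1 of 0.6 at 92% accuracy. That gap is the reason accuracy alone is a poor diagnostic on imbalanced data.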

If given a dataset from a department you’re not familiar with, how would you proceed with extracting actionable insights?

Sample answer:

I’d start by getting curious and reaching out to people in that department to understand the context of the data. What kind of problems are they trying to solve? What information is important to them? Let me give an example.

In a project where I was tasked with analyzing customer support data for an e-commerce company, I started by meeting with the customer service team to gather context. I focused on understanding their KPIs, like average response time, customer satisfaction, and resolution rate, to align my analysis with their specific objectives.

Next, I performed exploratory data analysis (EDA) on the dataset, which included variables like ticket timestamps, customer ratings, and agent performance metrics. I cleaned the data by addressing missing values in the customer rating field and normalizing timestamps to ensure consistency.

I then used data visualization techniques, such as histograms and box plots, to identify trends and outliers in ticket resolution times. I also applied clustering algorithms to segment customers based on interaction frequency and satisfaction scores. One key pattern I noticed was that long resolution times were strongly correlated with low customer satisfaction scores, especially for VIP customers.

After identifying these insights, I conducted follow-up discussions with the customer service team to lay out my findings and discuss potential process improvements, such as prioritizing VIP tickets and reassigning agents based on performance data.

Tips on how to answer:

- Explain your approach — Convince the interviewer you know what to do even when you encounter an unexpected scenario.

- Show you’re proactive — Reaching out to others and seeking guidance demonstrates your willingness to collaborate and do all it takes to solve a problem.
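A claim like "resolution time correlates with low satisfaction" is easy to back up with a number. As a small sketch, assuming made-up ticket data, the Pearson correlation coefficient can be computed directly. The `pearson` helper and both lists below are hypothetical.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical tickets: resolution time in hours and satisfaction on a 1-5 scale.
resolution_hours = [1, 2, 4, 8, 12, 24, 36, 48]
satisfaction = [5, 5, 4, 4, 3, 2, 2, 1]

r = pearson(resolution_hours, satisfaction)
```

A value of `r` close to -1 means satisfaction falls as resolution time rises. Correlation alone does not show that slow resolution causes the low scores, which is one reason the follow-up conversation with the support team matters.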

Check out our dedicated guide to learn how to approach situational interview questions.

Role-specific Interview Questions for Data Scientists

These questions specifically target your skills and experience as a data scientist. The exact nature of these questions can vary significantly depending on the industry, company, and specific role you’re applying for. To prepare for this part of the interview, thoroughly research the company and position.

We’ll present a few sample questions to give you a sense of what you can anticipate:

What models have you built that you are most proud of, and why?

Sample answer:

I’m particularly proud of being involved in building a model to predict patient medication adherence for a healthcare provider. The dataset included over 100,000 patient records, prescriptions, demographic data, and socioeconomic factors. The complexity of the problem lay in the fact that adherence isn’t driven solely by medical factors. Variables like income, education level, and lifestyle habits played a significant role.

To address this, I built a series of models: starting with logistic regression for baseline comparisons, then moving to more complex algorithms like random forests and gradient boosting. After feature engineering, I included variables such as appointment frequency, prescription refill history, and patient proximity to healthcare facilities. I also used one-hot encoding to handle categorical variables, such as insurance type and education level.

The random forest model performed best, with an AUC of 0.81, indicating strong predictive power. I did feature importance analysis, which highlighted socioeconomic factors like income and education as key drivers of non-adherence, alongside traditional medical indicators.

What made this project stand out was its practical impact. The model was deployed into the provider’s system, allowing healthcare professionals to identify high-risk patients and intervene with targeted support, such as personalized education or reminders.

Tips on how to answer:

- Choose a relevant and impactful project — Select a model that aligns with the company’s industry or focus and highlight its positive impact on business or the greater good.

- Demonstrate technical depth — This is the part where your tech expertise should shine through.

- Don’t neglect soft skills — Besides tech skills, a data scientist role also requires good communication, collaboration, and presentation skills.
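Two terms from the sample answer, one-hot encoding and AUC, are common follow-up questions, so it is worth being able to explain them from first principles. Below is a minimal sketch under stated assumptions: `one_hot` and `roc_auc` are hand-written helpers for illustration (not a library API), and the insurance types, labels, and scores are invented.

```python
def one_hot(values):
    """Encode a list of category labels as 0/1 indicator columns."""
    categories = sorted(set(values))
    return categories, [[int(v == c) for c in categories] for v in values]


def roc_auc(labels, scores):
    """AUC as the share of (positive, negative) pairs ranked correctly; ties count half."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in positives for n in negatives)
    return wins / (len(positives) * len(negatives))


# Hypothetical insurance types and model scores for four patients.
columns, encoded = one_hot(["private", "public", "none", "public"])
auc = roc_auc(labels=[0, 0, 1, 1], scores=[0.1, 0.4, 0.35, 0.8])
```

In this toy case three of the four positive-negative pairs are ranked correctly, so `auc` is 0.75. Reading AUC as "the chance a randomly chosen positive outranks a randomly chosen negative" is often an easier explanation for non-technical stakeholders than the ROC curve itself.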

Can you discuss your experience with real-time data processing and its implementation in previous projects?

Sample answer:

In a previous role at a tech company, I worked on developing a real-time anomaly detection system for server infrastructure, aimed at preventing service disruptions. The system needed to process high-velocity log data, with tens of thousands of events per second.

My team and I built a streaming data pipeline using Apache Kafka to handle data ingestion and Apache Spark Streaming for real-time analysis. Kafka acted as the message broker, ensuring efficient and scalable data flow, while Spark Streaming performed distributed processing to detect anomalies in server metrics, such as CPU usage spikes or unusual network traffic.

One of the main challenges was ensuring low-latency processing while managing the large volume of data. To solve this, I optimized the Spark jobs by fine-tuning batch intervals and applying window functions to analyze data in small time frames. I also used horizontal scaling to distribute the load across multiple nodes in the cluster, ensuring high throughput.

We implemented an automated alerting system, integrated with tools like Prometheus and Grafana, to notify engineers of any detected anomalies in real time. This system significantly reduced downtime, as it allowed us to preemptively address issues before they escalated.

Tips on how to answer:

- Focus on the “real-time” aspect — Emphasize the need to process and analyze data as it arrives rather than in batches. This conveys your understanding of the complexities involved in handling streaming data.

- Quantify the scale — If possible, mention the volume and velocity of data you dealt with to illustrate the size of the challenge you had to solve.
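To illustrate what analyzing data in small time windows can look like, here is a single-process sketch that flags readings far from the mean of a short sliding window. It is a simplified stand-in for the idea, not the Kafka and Spark pipeline from the answer. The `detect_spikes` function, window size, threshold, and CPU readings are assumptions chosen for the example.

```python
from collections import deque
from statistics import mean, pstdev


def detect_spikes(stream, window=5, threshold=3.0):
    """Yield (index, value) for readings far from the recent window's mean."""
    recent = deque(maxlen=window)
    for index, value in enumerate(stream):
        if len(recent) == window:
            mu, sigma = mean(recent), pstdev(recent)
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                yield index, value
                continue  # keep the outlier out of the baseline window
        recent.append(value)


# Hypothetical CPU usage percentages arriving one reading at a time.
cpu = [41, 43, 42, 44, 42, 43, 41, 97, 42, 44]
alerts = list(detect_spikes(cpu))
```

Because the function is a generator, it processes each reading as it arrives and keeps only the last few values in memory, which is the property that matters when data is streamed rather than batched.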

What experience do you have with integrating machine learning models into production systems?

Sample answer:

In a recent role at a SaaS company, I integrated a machine learning model for lead scoring into the company’s CRM system. The goal was to prioritize high-value leads based on behavior and demographic data and improve sales team efficiency. I developed the model using XGBoost, which outperformed other algorithms in cross-validation, achieving an AUC of 0.87.

For deployment, I collaborated with the DevOps team to containerize the model using Docker for portability. We used Kubernetes to orchestrate the deployment, allowing for scalable, fault-tolerant operation. This setup ensured the model could handle real-time scoring without performance bottlenecks.

To maintain performance and reliability, I implemented Prometheus and Grafana to track key metrics such as prediction latency and accuracy over time. I also set up an alert system for data or concept drift, ensuring we could react quickly to any degradation in model performance.

We integrated an automated pipeline for retraining the model on a weekly basis using fresh lead data, keeping the model updated and relevant. This retraining process was managed using MLOps best practices, with continuous integration/continuous delivery (CI/CD) pipelines to streamline deployment and model updates.

As a result, the sales team saw a 15% increase in lead conversion rates, and the CRM system became more efficient in prioritizing high-value prospects.

Tips on how to answer:

- Emphasize MLOps experience — Demonstrate your understanding of the entire lifecycle of a machine learning model, from development to deployment and monitoring.

- Mention specific tools and technologies — Showcase your proficiency with relevant tools and frameworks, such as Docker, Kubernetes, and CI/CD pipelines.

- Focus on collaboration — Explain how you worked with different teams, such as engineering, product, or customer success, to ensure successful integration.

- Emphasize monitoring and maintenance — Show that you understand the importance of continuous model evaluation and improvement in a production environment.
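The sample answer mentions alerting on data drift without saying how drift was measured. One commonly used option is the Population Stability Index (PSI), which compares how a feature or score is distributed now against how it was distributed at training time. The sketch below is an assumption about how such a check could look, not the approach from the story. The `psi` function, bin edges, and lead scores are all hypothetical.

```python
import math


def psi(expected, actual, bins, eps=1e-6):
    """Population Stability Index between a baseline and a recent sample."""
    def shares(values):
        counts = [0] * (len(bins) - 1)
        for v in values:
            for b in range(len(bins) - 1):
                last = b == len(bins) - 2
                # Bins are half-open, except the last one, which includes its upper edge.
                if bins[b] <= v < bins[b + 1] or (last and v == bins[-1]):
                    counts[b] += 1
                    break
        # eps avoids log(0) when a bin is empty.
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical lead scores at training time vs. two later weeks.
bins = [0.0, 0.25, 0.5, 0.75, 1.0]
training = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
same_week = list(training)
shifted_week = [0.6, 0.7, 0.8, 0.8, 0.9, 0.9, 0.95, 1.0]

stable = psi(training, same_week, bins)
drifted = psi(training, shifted_week, bins)
```

Identical distributions give a PSI of 0. A frequently quoted rule of thumb treats values above roughly 0.25 as a significant shift, though the right alert threshold depends on the feature and the business context.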

Explain how you have used data visualization to influence business decisions.

Sample answer:

In my previous role, I optimized a complex supply chain network for a global retailer. The data included inventory levels, supplier performance metrics, transportation costs, and customer demand forecasts, but it was impossible to derive actionable insights from this raw data.

To address this, I developed interactive dashboards using Tableau to visualize key supply chain metrics. One of the primary tools was a heatmap that displayed real-time inventory distribution across multiple warehouses. This helped us immediately spot regions with overstock and understock issues. After analyzing these patterns, I implemented a more dynamic stock replenishment strategy, reducing carrying costs by 15%.

In addition, I created line graphs and bar charts to track supplier lead times and on-time delivery rates. By visualizing historical trends, we identified suppliers with consistently long delays. This led to renegotiating contracts with underperforming suppliers, which improved overall delivery times and reduced transportation costs.

The visualizations were shared across departments, helping stakeholders understand the current state of the supply chain and enabling data-driven decisions.

Tips on how to answer:

- Highlight the “aha” moment — Briefly describe how a particular visualization helped stakeholders gain a new understanding or perspective on a complex issue.

- Employ storytelling — Structure your response as a narrative, highlighting the problem, your solution (data visualization), and the positive outcome. This will make your answer more engaging and memorable.

- Show you can speak the stakeholders’ language — Don’t stop at the technical outcomes, but mention things like cost reductions, lowered churn, and saving company money. This is what most CEOs and board members care about, so make sure your impact translates to the outcomes they value.

According to the U.S. Bureau of Labor Statistics, data scientist is among the fastest-growing occupations, with employment projected to grow 35% from 2022 to 2032.

How to Answer Data Scientist Interview Questions

Next, we’ll discuss some general tips for acing your data scientist interview questions in a way that will impress the interviewer.

Use the STAR method

This popular method for structuring interview answers stands for Situation, Task, Action, Result, and it’s a go-to approach for behavioral and situational questions. It will allow you to organize your thoughts and stay on track when talking about a past or hypothetical situation.

The STAR formula works so well because it provides a clear and concise framework to tell a story about how you handled or would handle a particular challenge, what exactly you did to solve a problem, and what the results were.

Here’s how to structure your answer using STAR:

- Situation — Provide a context by setting the scene and giving the necessary details about the past or hypothetical event or challenge you faced.

- Task — Clearly define your specific role, responsibilities, or objectives in the given situation.

- Action — Explain the steps you took to overcome the challenge.

- Result — Talk about the positive impact of your work and, whenever possible, quantify results.

Let’s analyze one of our existing answers through the STAR lens:

Situation: While working for a tech company, I was heavily involved in building a real-time anomaly detection system for their server infrastructure. We were dealing with a massive influx of log data generated every second.

Task: Our goal was to identify any unusual patterns or potential system failures as quickly as possible to prevent service disruptions.

Action: To handle this, we built a streaming data pipeline using Apache Kafka as the message broker and Apache Spark Streaming for real-time processing. What we did was ingest the log data into Kafka topics, where Spark Streaming jobs consumed and analyzed the data in micro-batches. Besides that, we employed various techniques like statistical modeling and machine learning algorithms to identify real-time anomalies. One key challenge was managing the high velocity and volume of data while ensuring low latency in anomaly detection. To address this, we optimized our Spark Streaming jobs, fine-tuned Kafka configurations, and leveraged distributed processing to scale our system horizontally. Finally, we also implemented a robust monitoring and alerting mechanism to ensure prompt action in case of any detected anomalies.

Result: This real-time data processing approach was instrumental in proactively identifying and addressing potential system issues. This significantly reduced service downtime and improved overall system reliability.
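If the interviewer is technical, it can help to show what the "statistical modeling" part of the Action step might look like. The sketch below is an illustration only, not the pipeline from the answer above: it uses plain Python lists as stand-ins for micro-batches, and the function name `detect_anomalies`, the window size, and the z-score threshold are all assumptions made for this example.

```python
from collections import deque
from statistics import mean, stdev
from typing import Iterable, List, Tuple


def detect_anomalies(
    batches: Iterable[List[float]],
    window: int = 50,
    threshold: float = 3.0,
) -> List[Tuple[int, float]]:
    """Flag values more than `threshold` standard deviations from the rolling mean.

    Each inner list stands in for one micro-batch of a numeric log metric
    (for example, request latency in milliseconds).
    """
    history: deque = deque(maxlen=window)  # recent values treated as normal
    anomalies: List[Tuple[int, float]] = []

    for batch_id, batch in enumerate(batches):
        for value in batch:
            if len(history) >= 2:  # stdev needs at least two points
                mu = mean(history)
                sigma = stdev(history)
                if sigma > 0 and abs(value - mu) / sigma > threshold:
                    anomalies.append((batch_id, value))
                    continue  # keep outliers out of the baseline
            history.append(value)
    return anomalies


if __name__ == "__main__":
    # Hypothetical latency readings, grouped into three micro-batches.
    stream = [
        [101.0, 99.0, 100.0, 102.0],
        [98.0, 100.0, 250.0, 101.0],
        [99.0, 100.0, 103.0, 97.0],
    ]
    print(detect_anomalies(stream))  # [(1, 250.0)]
```

In a real deployment, the batches would arrive from the streaming layer rather than a list, and the two-point warm-up used here would be far too short. Being able to name those limitations is part of a strong answer.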

Highlight analytical proficiency and outcomes from your data projects

When discussing your data projects, don’t just list the tools and techniques you used. Take it a step further and show how your analytical skills led to tangible results.

Be specific about the impact you made by illustrating how your work improved efficiency, reduced costs, or increased revenue. Explain how you identified new opportunities or solved a complex business problem. To back up your accomplishments, use numbers and percentages.

For example, instead of simply saying, “I built a machine learning model to predict customer churn,” you could say, “I developed a machine learning model that predicted customer churn with 85% accuracy, and enabled us to implement proactive retention strategies and reduce churn by 10% within three months.”
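Before quoting figures like these, make sure you can reproduce them. The following minimal sketch shows how the two numbers in the example could be computed. The labels, churn rates, and helper names (`accuracy`, `relative_reduction`) are hypothetical and exist only for illustration.

```python
def accuracy(y_true, y_pred):
    """Share of predictions that match the true labels."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def relative_reduction(before, after):
    """Relative drop from `before` to `after`, as a fraction of `before`."""
    if before <= 0:
        raise ValueError("`before` must be positive")
    return (before - after) / before


if __name__ == "__main__":
    # Hypothetical hold-out labels: 1 = churned, 0 = stayed.
    y_true = [1, 0, 0, 1, 0, 0, 1, 0, 0, 0, 1, 0, 0, 0, 1, 0, 0, 0, 0, 1]
    y_pred = [1, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 1, 0, 1, 0, 0, 0, 0, 0]
    print(f"accuracy: {accuracy(y_true, y_pred):.0%}")

    # Hypothetical monthly churn rates before and after the retention campaign.
    print(f"churn reduction: {relative_reduction(0.050, 0.045):.0%}")
```

Note that "reduced churn by 10%" here means a relative drop (from 5.0% to 4.5%), not a drop of 10 percentage points. Interviewers often probe this distinction, and they may also ask why accuracy is a reasonable metric when churners are a minority class, so be ready to say which one you mean.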

Remember, the interviewer wants to see how your skills translate into real-world value. By highlighting the positive outcomes of your projects, you show that you can make a meaningful contribution to their organization.

Pro tip: Tailor your answers to where you are in the interview process and who’s sitting across the table (a tech person, a recruiter, or the CEO). If you’re talking to non-tech stakeholders, remember that the value of data science in any field comes down to how it’s leveraged. Communicating that value clearly and focusing on business outcomes can set you apart from other candidates.

Discuss particular tools or programming skills

While highlighting your analytical and problem-solving skills is crucial, don’t forget to discuss your technical toolkit, as it’s how you establish your technical expertise.

Data science is a hands-on field, and employers want to know you have the practical skills to get the job done. Be prepared to discuss the specific tools and programming languages you’re proficient in (more about this in the next section).

This could include:

- Programming languages: Python, R, SQL, Java, Scala, etc.

- Data visualization tools: Tableau, Power BI, Matplotlib, Seaborn, etc.

- Machine learning libraries: Scikit-learn, TensorFlow, PyTorch, Keras, etc.

- Big data technologies: Hadoop, Spark, Hive, Kafka, etc.

- Cloud platforms: AWS, Azure, GCP, etc.

Tailor your response to match the specific job description. If the role requires expertise in a particular area, be sure to emphasize your relevant skills. However, instead of simply listing tools, be prepared to explain how you’ve used them in past projects to achieve specific outcomes. This will give the interviewer an idea of what you bring to the table and how the employer can benefit from hiring you.

Key Data Science Skills to Mention in Your Answers

Here’s a list of technical data science skills you should weave into your answers for maximum impact:*

Programming languages and frameworks

- Python

- SQL

- R

- Java

- SAS

- JavaScript

- Hadoop

- Apache

- C++

- Ruby

Data visualization

- Tableau

- Histogram

- Microsoft Power BI

- Matplotlib

- Shiny

- Looker

- Seaborn

- Spotfire

Machine Learning

- NLP

- Deep learning

- Optimization

- Clustering

- Predictive modeling

- Feature engineering

- Linear regression

Artificial Intelligence

- PyTorch

- GPT / ChatGPT

- TensorFlow

- scikit-learn

- Keras

- Transformers

- Hugging Face

- LangChain

Data Engineering

- Microsoft Azure

- Apache Spark

- Big Data

- Hadoop

- Docker

- Data pipelines

- ETL

- Data modeling

- Data governance

- NoSQL

- Data Lake

- Hive

- Apache Kafka

- Airflow

- Snowflake

- Data integration

*Not all of these skills will be relevant to all data science jobs. Always tie them back to your real knowledge and the job you’re interviewing for.

Summary of the Main Points

Here’s a condensed version to remember:

- A data science interview typically involves multiple rounds, with increasingly technical questions as you progress.

- Beyond technical expertise, interviewers are looking for candidates who can communicate clearly and explain model results to non-technical stakeholders.

- Expect scenario-based and “tell me about a time” questions, where you’ll need to describe how you solved specific problems or handled complex tasks. It’s crucial to select relevant examples and present them in a structured way that demonstrates measurable outcomes.

- For those questions, it’s best to use the STAR method to pack your stories into structured, impactful answers.

- Highlight your analytical and problem-solving skills, but don’t forget to discuss your technical toolkit.

- To get more peer-based advice on data science interviews, the r/datascience subreddit is our top pick.

FAQ

What if I’m transitioning from a data analyst to a data scientist role?

If you’re transitioning to data science from a data analyst role, focus on your strong foundation and experience in data wrangling, exploratory data analysis (EDA), and drawing conclusions from large data sets. Highlight projects where you applied ML, and show that you value continuous learning by taking courses and certifications (in Python, for example) or by practicing on platforms like Kaggle.

What’s more important, culture-related questions or the coding part of the data science interview?

Both are important. Coding and technical skills matter because they prove you can perform the core tasks of a data scientist. This is what gets you in the door. Cultural fit is also key because it will help you be content at work and contribute effectively within the team.

I was invited to a final interview round with the CEO. Should I still expect whiteboard questions?

Yes. This can be either a specific algorithm and data structure problem or a more abstract system design and architecture question. Overall, whiteboard questions help interviewers understand not only your problem-solving approach and technical expertise, but also how well you can articulate and justify your solutions, which is essential when you’re speaking with a CEO. Make sure to bring up tangible business benefits that CEOs care about.

How to answer data science interview questions with limited work experience?

If you are a fresh grad or are just getting into data science, it’s best to focus on your academic work, personal projects, or internships where you applied data science concepts or solved real-life business problems. If you have experience in related fields like data analysis, software development, or statistics, emphasize how these skills translate to data science.

What are the toughest questions a data scientist might face?

Here are some difficult and unexpected questions you can come across in a data science interview:

- Tell me about a time you were surprised by something you found in your analysis.

- Explain the central limit theorem when the random variables are independent but not identically distributed, assuming finite second moments.

- Here’s pseudocode for a neural net. Explain how it works and point out mistakes or inefficiencies in the architecture.

- How many table tennis balls would fit into a triple-seven?
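For the central limit theorem question above, one standard way to frame an answer is the Lindeberg version of the theorem. The statement below is a textbook sketch, and the notation ($\mu_i$, $\sigma_i^2$, $s_n$) is introduced here for illustration:

$$
X_1, X_2, \dots \text{ independent}, \quad \mathbb{E}[X_i] = \mu_i, \quad \operatorname{Var}(X_i) = \sigma_i^2 < \infty, \quad s_n^2 = \sum_{i=1}^{n} \sigma_i^2
$$

$$
\lim_{n \to \infty} \frac{1}{s_n^2} \sum_{i=1}^{n} \mathbb{E}\!\left[ (X_i - \mu_i)^2 \, \mathbf{1}\{ |X_i - \mu_i| > \varepsilon s_n \} \right] = 0 \ \text{ for every } \varepsilon > 0
\quad \Longrightarrow \quad
\frac{1}{s_n} \sum_{i=1}^{n} (X_i - \mu_i) \xrightarrow{d} \mathcal{N}(0, 1)
$$

In words: once the variables are no longer identically distributed, finite second moments alone are not enough, and no single term may dominate the total variance. Lyapunov’s condition, which asks for slightly more than two finite moments, is a common sufficient check.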

What questions should I ask at the end of my data science interview?

- How does the data science team collaborate with other departments, like engineering or product management?

- What are some of the most exciting projects the data science team is working on right now?

- How does the company approach data-driven decision-making?

- Are there opportunities to work on cross-functional projects or explore different areas within data science?